Here are the plots and main statistics I get with the data I have been using in the previous posts. Don't forget that they were obtained with a simple math treatment (SNV combined with Detrend) and with PLS models. In the next posts we will see whether derivatives, or algorithms other than PLS, give an improvement.
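As a reminder of what the math treatment does, here is a minimal sketch of SNV followed by Detrend in Python with NumPy. It is not the code used for these plots: the function names `snv` and `detrend`, the second-order polynomial, and the synthetic spectra are all assumptions made for illustration.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def snv(spectra):
    """Standard Normal Variate: center and scale each spectrum (row) on its own."""
    spectra = np.asarray(spectra, dtype=float)
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, ddof=1, keepdims=True)
    return (spectra - mean) / std


def detrend(spectra, degree=2):
    """Subtract a polynomial baseline fitted to each spectrum (row).

    degree=2 is an assumption; it is a common choice for NIR spectra.
    """
    spectra = np.asarray(spectra, dtype=float)
    # Use a scaled wavelength index so the fit is well conditioned.
    x = np.linspace(-1.0, 1.0, spectra.shape[1])
    # polyfit fits each column of its second argument, so pass spectra transposed.
    coefs = P.polyfit(x, spectra.T, degree)
    # polyval returns one fitted baseline per spectrum, shape (n_samples, n_points).
    baseline = P.polyval(x, coefs)
    return spectra - baseline


# Synthetic example (made-up data, not the soil spectra from the posts).
rng = np.random.default_rng(0)
raw = rng.normal(size=(5, 100)) + np.linspace(0.0, 3.0, 100) ** 2
treated = detrend(snv(raw))
```

The treated matrix is what would then go into the PLS regression, one model per parameter.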

There are cases, like Total Carbon, where we can see some non-linearity that we could maybe handle with an ANN, with logs, or with some other method. In this case, though, we do not have enough samples to develop an ANN model, so we will see.
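The log option could look something like the sketch below: transform the reference values before fitting the model, then back-transform the predictions. The helper names and the carbon values are hypothetical, and I assume the natural log here; nothing in these posts has been modelled this way yet.

```python
import math


def log_transform(values):
    """Natural log of strictly positive reference values."""
    if any(v <= 0 for v in values):
        raise ValueError("log transform needs strictly positive values")
    return [math.log(v) for v in values]


def back_transform(log_values):
    """Return predictions made on the log scale to the original units."""
    return [math.exp(v) for v in log_values]


# Made-up Total Carbon values, only to show the round trip.
carbon = [0.5, 1.2, 3.4, 8.9]
log_carbon = log_transform(carbon)      # what the model would be fitted on
recovered = back_transform(log_carbon)  # what we would report
```

Keep in mind that statistics computed on the log scale are not directly comparable with the ones in the plots, so the errors should be calculated after back-transforming.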

There are some parameters, like "Sand", where the performance is poor, but we will see whether using derivatives improves it.

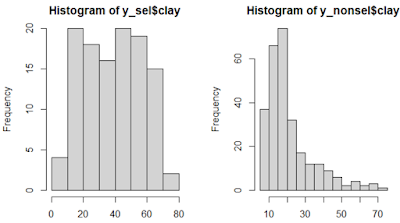

I am sure we can get improvements in Clay, Silt and Total Carbon, because we have a good range of values for them.