One important point before developing a calibration is to know the laboratory error, known as SEL (Standard Error of the Laboratory). This error changes depending on the product, which can be very homogeneous or heterogeneous; in the first case the lab error is lower than in the second.

For meat meal the error is higher than for other products. This is the case I am working on these days and want to share in this post.

A number of well-homogenized samples (n) were each divided into two or four subsamples and sent to a laboratory for Dumas protein analysis. Once the results came back, a formula based on the standard deviation of the subsamples and on the number of samples gave the laboratory error for protein in meat meal.
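One common form of such a formula is the pooled within-sample standard deviation of the replicates. The sketch below assumes that form, which may differ from the exact expression used for this post. The function name `standard_error_of_laboratory` and the protein values are made up for illustration and are not the data from this study.

```python
import math
from statistics import mean


def standard_error_of_laboratory(replicates):
    """Pooled within-sample standard deviation of lab replicates.

    replicates: list of lists, one inner list of lab values per sample.
    Samples may have different numbers of subsamples (e.g. two or four).
    """
    ss_within = 0.0  # squared deviations of each value from its own sample mean
    dof = 0          # degrees of freedom: sum over samples of (replicates - 1)
    for values in replicates:
        if len(values) < 2:
            continue  # a single value says nothing about the lab error
        m = mean(values)
        ss_within += sum((v - m) ** 2 for v in values)
        dof += len(values) - 1
    if dof == 0:
        raise ValueError("need at least one sample with two or more replicates")
    return math.sqrt(ss_within / dof)


# Hypothetical protein values (%), one inner list per sample
lab = [
    [50.0, 52.0],
    [48.0, 49.0, 50.0, 51.0],
    [55.0, 55.0],
]
print(round(standard_error_of_laboratory(lab), 3))
```

Pooling by degrees of freedom lets samples with two and with four subsamples go into the same estimate.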

The result is 1.3. Some of you may think this is a high value, but meat meal is quite a complex product, and I think it is normal.

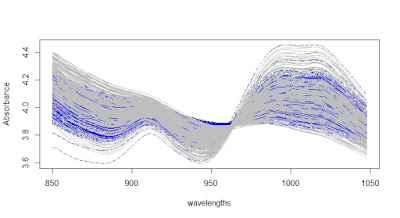

Every subsample that went to the lab was also scanned on a NIR instrument, and the spectra of the subsamples were studied separately with the RMS statistic, which showed that the samples were well homogenized.
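As a rough illustration of this check, the sketch below assumes that RMS means the root mean square difference between each subsample spectrum and the mean spectrum of its sample; NIR software may define and scale the statistic differently. The function name `rms_to_mean_spectrum` and the absorbance values are hypothetical.

```python
import math


def rms_to_mean_spectrum(spectra):
    """RMS difference of each subsample spectrum from the sample's mean spectrum.

    spectra: list of spectra (equal-length lists of absorbances), one per subsample.
    Returns one RMS value per subsample; small values suggest good homogenization.
    """
    n_points = len(spectra[0])
    if any(len(s) != n_points for s in spectra):
        raise ValueError("all spectra must have the same number of data points")
    # Mean absorbance at each wavelength across the subsamples
    mean_spectrum = [sum(col) / len(spectra) for col in zip(*spectra)]
    return [
        math.sqrt(sum((a - m) ** 2 for a, m in zip(s, mean_spectrum)) / n_points)
        for s in spectra
    ]


# Hypothetical absorbances at four wavelengths for two subsamples of one sample
sub_a = [0.50, 0.60, 0.70, 0.80]
sub_b = [0.52, 0.62, 0.72, 0.82]
print(rms_to_mean_spectrum([sub_a, sub_b]))
```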

Now I have the option to average the NIR predictions of the subsamples (case 1), or to average the spectra of the subsamples, predict the averaged spectrum with the model and take that predicted result (case 2). Here I use case 1 and draw a residual plot, where each residual is the average prediction subtracted from the lab average value:

The blue lines are ±1·SEL, the yellow lines ±2·SEL and the red lines ±3·SEL.
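The case 1 residuals and their position relative to these limits can be sketched as follows. This is a minimal example under assumptions: the function name `residual_bands` and all lab and NIR values are made up, and only the SEL of 1.3 comes from this post.

```python
from statistics import mean


def residual_bands(lab_replicates, nir_predictions, sel):
    """Case 1: average the subsample predictions, then residual = lab mean - NIR mean.

    Returns a list of (residual, band) tuples, where band is 1, 2 or 3 for a
    residual within +/-1, 2 or 3 SEL, and 4 for anything beyond 3 SEL.
    """
    results = []
    for lab_vals, nir_vals in zip(lab_replicates, nir_predictions):
        residual = mean(lab_vals) - mean(nir_vals)
        ratio = abs(residual) / sel
        band = 1 if ratio <= 1 else 2 if ratio <= 2 else 3 if ratio <= 3 else 4
        results.append((residual, band))
    return results


SEL = 1.3  # laboratory error reported above
lab = [[50.0, 52.0], [48.0, 50.0], [60.0, 62.0]]  # hypothetical lab values
nir = [[50.5, 51.0], [51.0, 51.0], [55.0, 57.0]]  # hypothetical NIR predictions
for residual, band in residual_bands(lab, nir, SEL):
    print(f"residual={residual:+.2f}  band={band}")
```

Samples that fall in band 4 are the ones worth checking first, either in the lab values or in the spectra.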